Education

- Cornell University – College of Arts & Sciences/Bowers College of Computing and Information Science

- Relevant Coursework: Machine Learning, Computer Vision, AI Reasoning & Decision, Database Systems, Data Structures & Object-Oriented Programming, Computer System Organization, Functional Programming, Discrete Structures

Technical Skills

- Languages: Python, Java, SQL, OCaml, C#, C++, JavaScript

- Frameworks/Libs: PyTorch, TensorFlow, scikit-learn, pandas, NumPy, Matplotlib, OpenCV, Jupyter, DeepFace, LangChain, OpenAI, REST APIs

- Tools: Git, Tableau, Stata, Figma, VS Code, Unity (C#/AR), LaTeX

Professional Experience

Cornell Tech – Undergraduate Researcher (Jun 2025 – Aug 2025)

- ML/VLM applications in emotion detection and regulation

ATLAS Institute – Software Dev Intern (May 2024 – Aug 2024)

- Human-AI interaction research

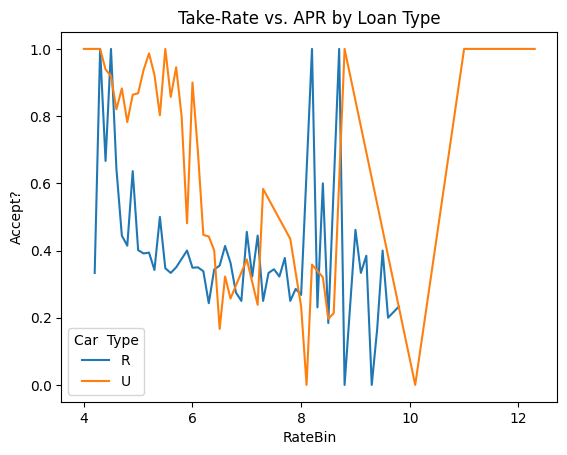

Platt Park Capital – Data Intern (May 2023 – Aug 2023)

- Healthcare and multi-site consumer

University of Colorado – Computational Chemistry Aide (Oct 2021 – Aug 2022)

- Theoretical chemistry